Foundations of Programming Languages: A TA's Perspective (Part 1/2)

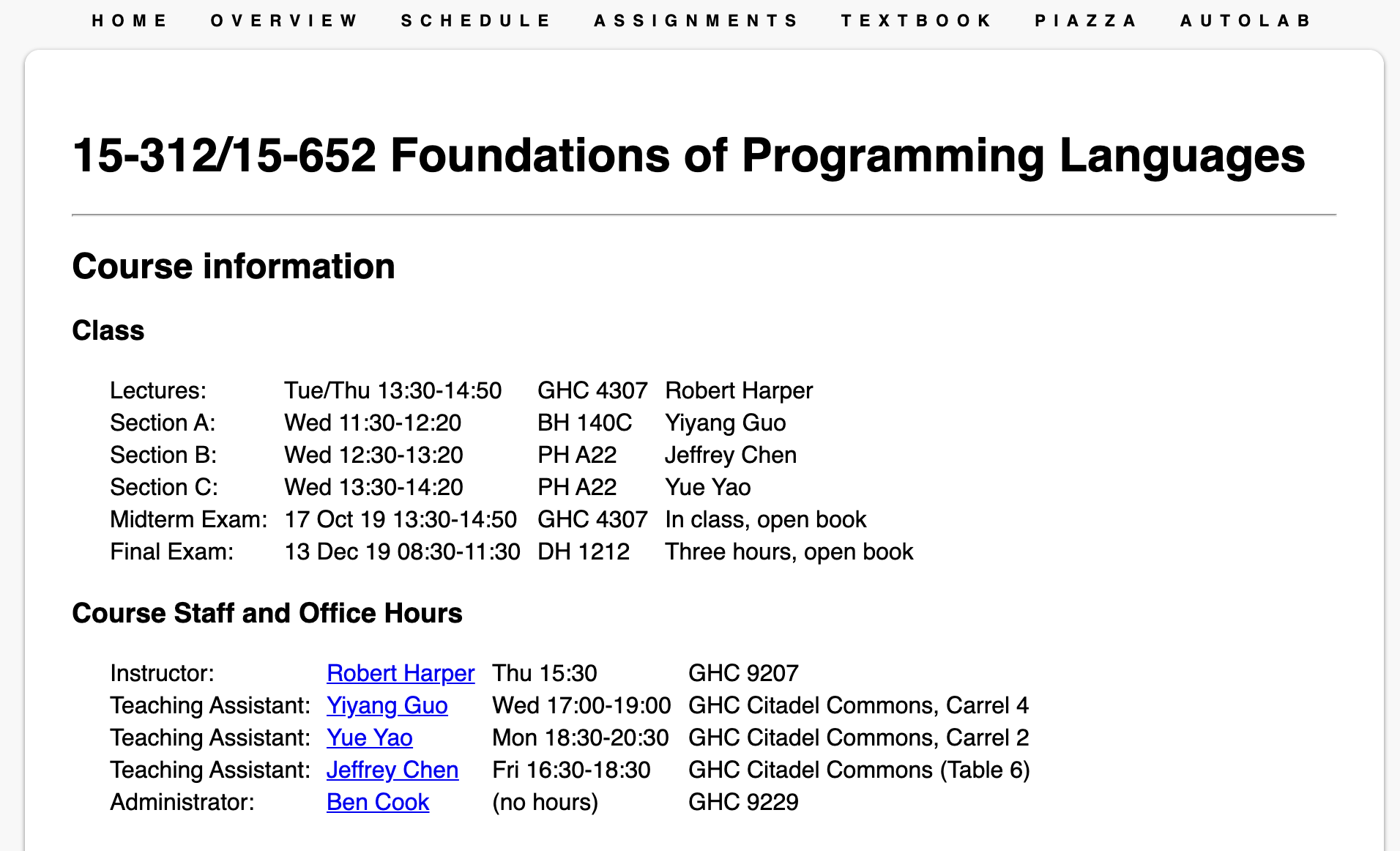

Fall 2019 instance of "Foundations of Programming Languages"

Fall 2019 instance of "Foundations of Programming Languages"

In the fall semester of 2019, I had the opportunity to work as a teaching assistant for the course 15-312/15-652 Foundations of Programming Languages, taught by Bob Harper. Today we wrapped up (almost) all of the work. As I have always said, the best way to build clear insight into a subject is perhaps by teaching and explaining it to the others. On the other hand, it was quite a unique experience. In addition to the usual grading and recitation, I invented a few homework problems (the DFA problem in HW2 and the entire KPCF problems) and wrote a few exam questions. There are a few things that went pretty well, and honestly some things just don’t quite go well as we would hope for. For the purpose of this post, I don’t want to (and honestly can’t) go into too much details, but focus on mainly 3 things: the philosophy behind this course, one unfortunate situation, and what it feels like working with Bob.

This is the first part of a two-part post, where I mainly talk about the philosophy of this course, as I understand it. In other words, I seek to answer the question of why study programming languages with my own experience.

The Course & its Philosophy

What should a course on programming languages be about, and why or why not should one take it? The question is closely related to another question, which is “Why Study Programming Languages”, a question that has been answered pretty neatly. Yet those two questions are distinct in that understanding the importance of studying programming languages does not immediately shed light on how we should teach it. In particular, one must further make design choices regarding what content to present, in what manner, and what are the conclusions we want to put emphasize on. In other words, motivating the study of a particular field is about asking the right questions, while teaching a topic is perhaps more about providing solutions.

Much of the study of natural sciences are devoted to answering the question of what is a particular subject, be that physics, computers, and in our cases, programming languages. The answers to this type of questions usually come in two forms: eliminatism ones and the holism ones. (I am not an expert on scientific philosophy, and I could be misusing terminologies.) Eliminative approaches explain things by providing an alternative world where the phenomenons can be derived from other first principals. For example: “There is no light, but merely electromagnetic waves of particular wavelengths”. The eliminative approach has been very successful in natural sciences. Eliminating the idea of light and be in favor of the broader notion of electromagnetic waves, we can make better predictions for the behavior of light itself. On the other hand, holism explanations are direct characterizations of the phenomenons themselves. For example, trying to understand chemical reactions by establishing rules for different types of reaction, instead of trying to derive everything from quantum physics, will be a holism approach.

It must be stated unequivocally that both approaches are essential and effective. The effectiveness of the eliminative approach has been proved over and over by advancement in Physics. It has been so effective that some even argue that it is possible for us to understand the entire universe if we just start from the first principals of Physics. A famous 1972 article More Is Different by P. W. Anderson counters this point nicely. It emphasizes a simple fact: science itself is hierarchical, and laws of nature have to be identified for each hierarchy. Understanding the components making up the system does not necessarily provide sufficient insight into the behavior of the system itself.

We have already seen a lot of examples in computer science. We have perfect understanding on how each neuron works in deep neural networks, and we can even derive an equation that is sufficient to describe the behavior of the entire network, yet we still don’t understand how it works. Same story for concurrent data structures, even though we understand how the hardware operates in every detail, we have a hard time trying to derive the theoretical performance bounds. Both examples suggests when a system scales in quantity, new observations have to be made in order to understand the system as a whole [5].

I think it’s important to remind people that the “more is different” idea applies to Computer Science as well, and especially to the study of programming languages. When people study computers, it is extremely easy for us to take a full-blown eliminative view and forgot about the holism approach entirely. Part of the reason is that computers are man made objects, and there doesn’t seem to be need to build a holism view if we understand how they are built. This is especially true in terms of programming languages. In many universities, the study of programming languages are very often conflated with the study of compilers, and we very often teach programming languages by explaining it in terms of machine behaviors or other languages. Case in point includes teaching people pointers using “address spaces” and telling students variables of objects in Java are “just pointers” [1], objects are just “structs associated with functions”, and most infamously recursion is about a “stack”. This method quickly breaks down when we try to introduces to people ideas like coroutines and continuations. The implementation for both mechanism are messy and complicated, and learning about them does not shed light on how to properly work with them! If we take this further, you immediately reach conclusion that “all turing complete languages are essentially same because they can simulate each other”, which is a very degenerative view.

It’s true that computers and programming languages are both man made, and are constructed in a level by level manner. However, Anderson’s observation still applies. By composing more and more components into one new thing, we lose the ability to reason about the things we built through analysis of its components, and new laws have to be found for this new thing we just created. In fact, I believe its fair to say that the study of computer science brings new meaning to Anderson’s argument. In stead of having to discover principals for whatever nature throw at us, in computer science we have the ability to choose the principals governing higher hierarchies by building abstractions. Instead of having to live with a fixed lower hierarchies (e.g., we can’t change the laws of physics that governs chemistry), we get to design different implementations for the same abstraction.

Many CS courses often only offers an eliminative perspective. Many books [2] in PL puts way too much emphasize on the eliminative perspective of programming languages, often discuss languages in terms of their “most popular” implementations, and treats language features as syntatic sugars. This course aims to deliver something unique. We wish to teach people a way to first talk about different languages in terms of the abstractions. Using those tools, we could compare and evaluate language designs according to the abstraction they aim to provide, instead of a specific implementation of their compilers/interpreters.

In other words, we hope to introduce to the students the power of formalisms, that is, the ability to formalize languages in a way that does not rely on any specific implementation (spoiler alert: through the lens of types). Those formalisms not only allow us to make sense of the language designs (and identifies language designs that do not make sense), we also discover that the same set of formalisms happen to capture much of what it means to compute, and exposes that there exists different types of computations [3]. Those goals are achieved by exposing students to multiple toy languages, each with a different feature. For each of those toy languages, we demonstrate how do we formalize the feature, and discuss various designs. In other words, this course discuss the act of making abstractions itself [4].

The unique thing about programming languages is that, programming languages design is very often a topic of public discussion. Everyone with some experience of programming, at some point, talks about one’s favorite language / feature [6]. People fight, and organize around choices of programming languages / language designs. And, as you would imagine, most of the claims, especially those made by Key Opinion Leaders on the Internet, instead of scholars, doesn’t make sense once you try to put it in concrete terms using formalisms. In other words, one’s understanding about programming languages, will be under constant attack by all sorts of nonsense. It’s important for one to be able to hold his/her ground in the face of other unsound claims, and to respond to those claims with logical and sound arguments. Because otherwise the things we advocated will not be able to stick for very long. This again puts more emphasis on the power of formalisms: personal tastes and cults of programming practices won’t stand the test of time [7], because they are justified by the situations. Only rock solid and crystal clear reasoning (i.e. theorems) through formalisms will.

Working with Bob

Finally I want to say something about working with Bob. As a disclaimer, I did ask Bob to sign my copy of PFPL, so the conflict of interest is obvious, and you should definitely treat whatever I got to say next as total trash 😃.

Bob is the the principled type: those people act according to principles, even if those principles potentially lead them to practically awkward situations. In particular, arguments of practicality will almost always be rejected. Things like “we should do this or that because they are the easy work around” are rejected outright: Bob believe it’s simply not worthwhile to trade what’s right for the temporary expediency because you will ends up paying more. Now I don’t totally agree with this point of view, but he has a point. Still remember the billion-dollar mistake of null-pointers committed by Tony Hoare? For the educator’s point of view, Bob believe that it’s most beneficial to teach students the right way, and leaving the “adapting to an imperfect industry solution” part to the job. Again, I think it probably is the right general idea, but things should be taken care of case by case.

On a second note, Bob always tries to present the version of himself. In some cases, he basically apologizes for being not perfect, or not being on his A-game. I genuinely don’t know what to say, because there is no way that I’m holding myself to such high standards. As a result, when you work with Bob, be prepared to be corrected publicly because 1) the instruction team has the absolute responsibility to provide student with unequivocally right ideas, no matter the cost 2) it’s understandable that all humans make mistakes / confuses (conflates) things. I got the honor to be publicly corrected by Bob on Piazza twice. If any of the reader got themselves into a similar situation, my suggestion is to keep calm: there’s nothing personal, Bob don’t question your job performance, and it’s always worthwhile to go to his office to clear things up for your own good.

It has been a very interesting journey working with Bob. 15-312 has been offered for almost 2 decades and the course is still under significant development. As a TA, you have the opportunity to write new homeworks and discuss your work with Bob. Since Bob genuinely care about the learning experience of students, your efforts will not go unnoticed.

Footnotes:

[1] In fact, we don’t really have a better way to teach them because the languages are flawed once you start to discuss the abstraction they try to provide. This is particularly true for languages like C. If you start teaching students C as some macro for assembly, then there is no way one can explain recursion without mentioning a stack, because dynamically “substituting a value into the function body” sounds much more absurd. That is one more reason against arbitrarity in language design, because they create popular languages that are super hard to teach!

[2] Yes, I’m thinking about “Programming Language Pragmatics”.

[3] Trying to argue that all computations can be viewed as actions of a universal Turing Machine also falls into the eliminative argument.

[4] To be fair, this course also take the eliminative approach from time to time. One example is that in the discussion of Dynamically Typed languages we are argued that dynamically typed languages are really uni-typed. Another example being “by name languages are just by-value languages with support for computation types”. One could argue this type of arguments goes against our own philosophy. Well, my defense would be the following: we only do this when we hope to show that the added structure does not provide enough benefit (if not being actually harmful) to motivate the design.

[5] For the same reason I believe machine learning methods right now targeting automated code synthesis / reasoning won’t work very well, because most of the methods relies on treating the language as a linguistic model. This might be fine for natural languages because people don’t really embed sophisticated logic structure in to a few sentences (because our brain are poor at keeping track of the logic!), but that’s not the case for programming languages. One must respect that programs have a rich, high-order structure which involves careful reasoning.

[6] I do. I do it a lot because Standard ML is really good, especially because of its visible compiler.

[7] A moment ago we were still talking about Object Oriented Programming and Design Patterns, then it’s Aspect Oriented Programming, followed by Model-View-Controllers (and derivatives like MVVM). If you haven’t heard of any of them, then it proves my point exactly.